Yes!

Most definitely— I am finding this thread fascinating.

Nice to meet you, too

And totally agreed about continually questioning our processes – this is a vital part of any project that wants to be successful!

Stories are very important (as has been discussed on this thread) and can take many forms. I am glad we are keeping an open mind as to what qualifies.

nice

glad we are on the same page :).

Feature, not bug

Many comments by staff are not a problem at all. Reason: they typically do not get coded by Amelia anyway. They are in large part encouragements and requests for clarifications. They are a major part of the motivating mechanism that @Costantino is searching for. So, the ethno analysis still represents what the community thinks and does – and there are hundreds of comments written by the community.

Pro tip: do not think in shares (60% of the comments are written by the staff!). Think in absolute numbers (the community has written 600 comments and 300 posts!). As with any data problem, discarding stuff is fairly easy, whereas getting it is expensive (and slow). If you can bring Guy 500 more comments and 1-200 posts, and ScImpulse can do the same, we are good.

UPDATE – I rechecked my script and found there was some double counting in team comments (it is really a known MySQL issue, which you can get rid of via code). The data as of now are:

This is important ethnographic work

We should remember that a large amount (I would venture to say 60%) of ethnographic data is generated through interviews with research participants. And what are interviews if not “encouragements and requests for clarifications”? The ethnographic process assumes a person (or in our case, people) asking participants more in-depth questions, prompting them to think more deeply about things they might take for granted as parts of their daily process. If we are doing collective ethnography, I see the work that the team does as important ethnographic work.

Also on the subject of stories… I was reminded of this quote from anthropologist Michael Fischer (in a 2015 American Ethnologist article):

“Stories are not just stories of, but stories for. They are ways of thinking about how we want to build communities of the future, for ourselves as well as others…they provide the mental tools as well for sur-vie”

Thanks for the updates, I just got lost in the last sentence: “If you can bring Guy 500 more comments and 1-200 posts, and ScImpulse can do the same, we are good.” What does that mean?

Data is a very powerful (and very flexible) indicator, indeed. Talking earlier about meta topics, I am drafting a separate post with some questions about how we outline our lessons learned. Thanks again!

About the last sentence

Sorry for the cloudy writing. It means this: Guy and his group at UBx are aggregating ethno data and crunching it. For now, they have the 322 posts and 1,442 comments (of which 840 were written by community members – the numbers keep changing as new material rolls in) on edgeryders.eu. Most of these (almost all the comments) are the result of engagement done by Edgeryders.

What I am saying is: WeMake and ScImpulse should each do engagement activities that result in 500 community-authored comments of reasonably high quality. In this way, we would have roughly 800 + 500 + 500 = 1,800 community-authored comments, perhaps 3,000 comments overall, and we would be good. “Good” means we have enough high-quality content to mount a large-scale, network-powered ethnography and see (a) whether our method works at scale; and (b) how communities go about inventing and deploying care services. This is consistent with the added value of our project (section 1.3.6 of the proposal).
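For anyone who wants to redo the arithmetic as the numbers change, here is a minimal sketch. It assumes the figures quoted in this comment (1,442 comments, 840 of them by community members) and the suggested target of 500 community-authored comments per partner; the variable names are only illustrative and are not taken from any actual script.

```python
# Figures quoted in the comment above; they keep changing as new material rolls in.
total_comments = 1442
community_comments = 840

# Suggested target: 500 community-authored comments from each partner.
target_per_partner = 500
partners = ["WeMake", "ScImpulse"]

# "Shares" view: how much of the conversation is written by staff.
staff_comments = total_comments - community_comments
staff_share = staff_comments / total_comments

# "Absolute numbers" view: community-authored comments if both targets are met.
projected_community = community_comments + target_per_partner * len(partners)

print(f"staff comments now: {staff_comments} ({staff_share:.0%} of all comments)")
print(f"projected community-authored comments: {projected_community}")
```

With the exact figure of 840 the projection comes to 1,840; the comment rounds 840 down to roughly 800, which gives the 1,800 quoted above.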

You can execute on our methods, or try something else. You can do it on edgeryders.eu, or anywhere. The comments could be reactions to posts, like here, or to schematics or videos of instructables, as you prefer. But that, I would say, is your goal (see also section 1.1.3 of the proposal).

qualitative vs quantitative?

Thanks @Alberto for the update. I am just wondering whether we have any qualitative criteria besides the numbers. Take the approach of doing activities on the ground and then transferring the stories narratively to ER, where 80% of those will be written by staff and, not surprisingly, a good chunk of the comments will be by staff as well: as long as the number is sufficient, is the metric still OK? There are some case studies around openIDEO, with a qualitative analysis that involved interviews. I wonder if a brief booklet analysing the experiment on ER will be compiled at some point? Thanks!

the meaning of the conversation - and how to

“Putting a project on a website does not qualify as collective intelligence: in the proposal, we have argued that online conversation around a project does.”

We all agree on the value of the conversation, which can be “exploited” while it is online and codable, as on ER. Consider me sold on this.

To me the central point is this:

How do we REALLY motivate people to overcome the friction in the process of writing online? What’s the gain in writing here on ER, or writing online in general? Of course these are not questions with a black-or-white answer. We need to discover and leverage the trade-off: the more value I can feel/extract, the more “pain”/friction/effort I can accept.

Concerning some experiments:

So we need other working strategies (and then protocols, tools and how-tos) that consider other human approaches and use cases. Just to mention some cases:

@Alberto IMHO this is what we need to tackle in order to demonstrate not only that it is possible to analyze the “data” when they are available, but also that we have a sustainable way to create the right conditions for users to create this shared value, maybe considering this kind of approach for the whole process.

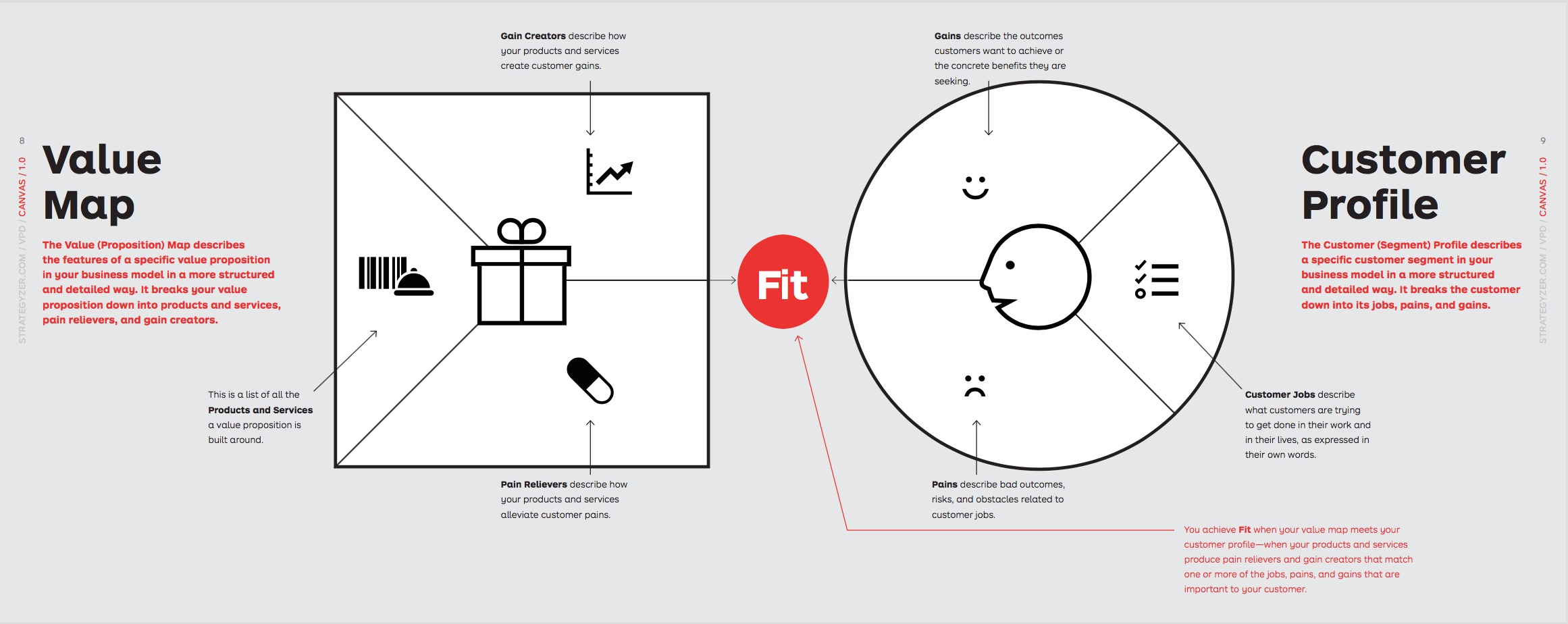

focusing on the user, what are:

the gain creators ?

the pain relievers ?

Self-selection, engagement and a pinch of innovation

Yes. The “what’s in it for me” question is, as always, the central one of engagement.

Your list of use cases is, as far as I can see, fundamentally splittable into two parts.

On case 1, I have no answer. Your experience is much deeper than that of any of us in the consortium. If you can’t solve it, no one can. Zoe is almost certainly in the worldwide top 10 experts on this issue. We go back to the Commission and say “not happening. Collective awareness platforms are not possible when the collective process is aimed at producing something other than a document.” It is a dignified negative result, still a result.

We have, however, met cases 2-7 many times. I have done my best to share this experience with you all, many times, but it seems it is so counterintuitive that you cannot bring yourselves to try it. I think this is why Guy finds this conversation theoretical: practices that (sort of) work are already there, generating the data for this project, in exactly this context. Also, I am sure there are others. And yet, here we are, with 10 months at the most to wrap up the data generation phase, asking ourselves how to do engagement…

So, how do you want to proceed? How can we help? Shall I ask Noemi to give you a how-to presentation, maybe contextualized to the opencare experience? @johncoate gave one in LOTE5, but at the time opencare was just starting.

I am a bit worried, because it was our main promise that few people get this stuff, and we are among these few. This case is made in Section 1.3.2 of the proposal (Approach). The key passage is this:

We are also aware that, while successes get a lot of exposure, most attempts at mobilizing communities towards collectively intelligent outcomes fail.

OpenCare moves from the hypothesis that many unsuccessful outcomes are predicated on bad execution, in turn often caused by theoretical misunderstanding. Few would dispute that a smart community runs not only on ICT technology and infrastructure, but also on a web of correctly aligned incentives, shared values, social conventions, and common vocabulary. However, we propose that, in practice, most of the attention and resources of people and organizations wishing to convene smart communities are concentrated solely on ICT artefacts. The result is, unsurprisingly, empty platforms that host little meaningful conversation (Prieto-Martin et. al. 2012).

Getting online seen as a barrier (?)

On one side, I do agree with you that users may refrain from posting on a platform, even more so if they’re asked to do so after an activity has ended. Earlier comments underline the usefulness of having this take place while the process develops. The language barrier might not be that much of a barrier, assuming the content can be ethno coded from any language (using multilingual tags, let’s say).

Bottom line, users must of course see the added value of posting online, otherwise it may indeed be quite hard to have them spend the necessary effort to do so.

I also agree that we could/should develop other working strategies to get the material we are after.

–

On the other, more pragmatic, side, we need to make sure we deliver what we promised to deliver. Looking at things from the different tasks UBx is involved in, it is true that we can only put our methods and tools to work if we can harvest some data echoing the exchanges between people. Stories or conversations, I have no firm opinion on this question; as long as the content can be tagged, I’ll be fine.

–

What I hear is that this thread oscillates between these two sides, looking at the issue from a “theoretical” viewpoint; or from a more pragmatic DG CNECT viewpoint keeping in mind we are bound to project deliverables.

–

I might be wrong, I count on you guys to help me get a better understanding of this issue.

nothing theoretical at all

I’m not a researcher, I’m a practitioner.

There’s nothing meta in my comment. Just things to be analyzed and solved if we wanna make “more than homework”.

focusing on the user, what are:

the gain creators ?

the pain relievers ?