I participated in the recent NGI Forward workshop on Inequalities in the age of AI organized by Edgeryders. I was part of the discussion on scenarios for the regulation of AI, moderated by Justin Nogarede. The format was unusual, at least for me: a fishbowl discussion with four invited guests acting as icebreakers. These were Marco Manca @markomanka (Member of NATO’s Working group on Meaningful Human Control over AI-based systems); Seda Gürses @sedyst (Department of Multi-Actor Systems at TU Delft); Justin @J_Noga; and myself. The people in the fishbowl kicked off, but then we melted into the general discussion as others stepped up to make contributions and ask questions. This worked very well, and enabled a lively discussion.

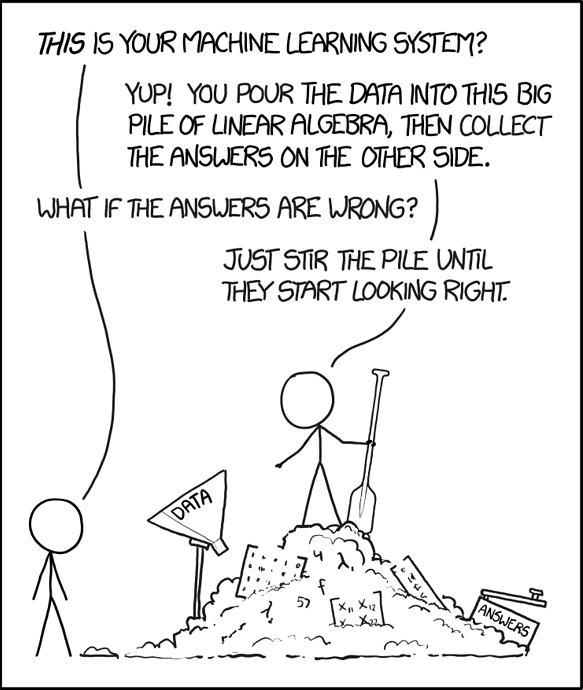

In general, the group felt the need to spend quite some time getting a grip on a shared idea of what AI actually is, and how it works, before it could discuss its regulation. Occasionally, the discussion veered into being quite technical, and not everybody could follow it all the time, even though we had an over-representation of computer scientists and digital activists. This is in itself a result: if this crowd struggles to get it, democratic participation is going to be pretty difficult for the general public.

We used the Chatham House Rule, so in what follows I do not attribute statements to anyone in particular.

We kicked off by reminding ourselves that the new von der Leyen Commission has promised to tackle AI within the first 100 days of taking office. Brussels is mostly happy with how the GDPR played out, namely with the EU being recognized as the de facto “regulatory superpower”. The AI regulation in the pipeline is expected to have a similar effect to that of GDPR.

Participants then expressed some concern around the challenges of regulating AI. For example:

- A directive may be the wrong instrument. The law is good at enshrining principles (“human-centric AI”), but in the tech industry we are seeing everyone, including FAANG, claiming to adhere to the same principles. What we seem to be missing is an accountable process to translate principles into technical choices. For example, elsewhere I have told the story of how the developers of Scuttlebutt justified their refusal to give their users multiple-device accounts in terms of values: “we want to serve the underconnected, and those guys do not own multiple devices. Multiple device account is a first world problem, and should be deprioritized”.

- The AI story is strongly vendor-driven: a solution looking for a problem. Lawmaking as a process is naturally open to the contribution of industry, and this openness risks giving even more market power to the incumbents.

- AI uses big data and lots of computing power, so it tends to live in the cloud as infrastructure. But the cloud is itself super-concentrated, it is infrastructure in few private hands. The rise of AI brings even more momentum to the concentration process. This brings us back to an intuition that has been circulating in this forum, namely that you need antitrust policy to do tech policy, at least in the current situation.

- The term “AI” has come to mean “machine learning on big data”. The governance of the data themselves is an unsolved problem, with major consequences for AI. In the health sector, for example, clinical data tend to be simply entrusted to large companies: the British NHS gave them to DeepMind; a similar operation between the Italian government and IBM Watson was attempted, but failed, because data governance in Italy is with the regions, and they refused to release the data. We learned much about the state of the art of the reflection on data governance at MyData2019: to our surprise there appears to be a consensus among scholars on how to go about data governance, but it is not being translated into law. That work is very unfinished, and it should be finished before opening the AI can of worms.

- AI has a large carbon footprint. Even when it does improve on actual human problems, it does so at the cost of worsening the climate, not in a win-win scenario.

- The Internet should be “human-centric”. But machine learning is basically statistical analysis: high-dimensional multivariate statistical models. When it is done on humans, its models (for example a recommendation algo) encode each human into a database, and then model you in terms of who you are similar to: for example, a woman between 35 and 45 who speaks Dutch and watches stand-up comedy on Netflix. Everything not standardizable, everything that makes you you, gets pushed to the error term of the model. That is hardly human-centric, because it leads to optimizing systems in terms of abstract “typical humans” or “target groups” or whatever.
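The last point, about the error term, can be made concrete with a minimal sketch. It assumes a deliberately crude "recommender" that predicts each person's viewing as the average of their demographic group. The viewers, groups and hours below are invented for illustration and do not come from any real system.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical viewers: invented data, for illustration only.
viewers = [
    {"name": "A", "group": ("woman", "35-45", "Dutch"), "comedy_hours": 2.0},
    {"name": "B", "group": ("woman", "35-45", "Dutch"), "comedy_hours": 10.0},
    {"name": "C", "group": ("woman", "35-45", "Dutch"), "comedy_hours": 6.0},
    {"name": "D", "group": ("man", "18-25", "Italian"), "comedy_hours": 1.0},
    {"name": "E", "group": ("man", "18-25", "Italian"), "comedy_hours": 3.0},
]


def fit_group_means(rows):
    """'Train' the model: one predicted value per demographic group."""
    by_group = defaultdict(list)
    for row in rows:
        by_group[row["group"]].append(row["comedy_hours"])
    return {group: mean(hours) for group, hours in by_group.items()}


model = fit_group_means(viewers)

# The residual (error term) is what the model cannot explain about each person.
residuals = {
    row["name"]: row["comedy_hours"] - model[row["group"]] for row in viewers
}

for row in viewers:
    print(row["name"], "predicted:", model[row["group"]], "residual:", residuals[row["name"]])
```

Viewers A, B and C receive the same prediction because they share a group. Whatever distinguishes them from each other shows up only in the residuals, which the model treats as noise.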

As a result of this situation, the group was not even in agreement that AI is worth the trouble and the money that it costs. Two participants argued the opposite sides, both, interestingly, using examples from medicine. The AI-enthusiast noted that AI is getting good at diagnosing medical conditions. The AI-sceptic noted that medicine is not diagnosis-centric, but prognosis-centric; a diagnosis has no value if it does not improve human life. And the prognosis must always be negotiated with the patient. IT in medicine has historically cheapened the relationship between patient and healer, with the latter "classifying" the former in terms of a standard data structure for entry into a database.

Somebody quoted recent studies on the use of AI in medicine. The state of the art is:

- Diagnostic AI does not perform significantly better than human pathologists. (Lancet, arXiv)

- Few studies do any external validation of results. Additionally, deep learning models are poorly reported. (Lancet)

- Incorrect models bias (and therefore deteriorate) the work of human pathologists (Nature, arXiv)

- There are risks that AI will be used to erode the doctor-patient relationship (Nature)

Based on this, the participant argued that at the moment there is no use case for AI in medicine.

Image credit: XKCD

We agreed that not just AI, but all optimization tools are problematic, because they have to make the choice of what, exactly, gets optimized. What tends to get optimized is what the entity deploying the model wants. Traffic is a good example: apparently innocent choices as to what to optimize for turn out to have huge consequences. When you design traffic, do you optimize for driver safety? Or for pedestrian safety? Or minimize time spent on the road? Airport layout is designed to maximize pre-flight spending: after you clear security, the only way to the gate goes through a very large duty free shop. This is “optimal”, but not necessarily optimal for you.
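A toy sketch of the traffic example shows how the "optimal" answer depends entirely on the objective chosen. The three street designs and all their scores are made up for illustration; only the three candidate objectives come from the discussion.

```python
# Hypothetical street designs with invented scores (lower is better).
designs = {
    "wide fast lanes": {
        "driver_risk": 3, "pedestrian_risk": 9, "travel_minutes": 10,
    },
    "narrow lanes, raised crossings": {
        "driver_risk": 4, "pedestrian_risk": 2, "travel_minutes": 16,
    },
    "separated median, few crossings": {
        "driver_risk": 1, "pedestrian_risk": 6, "travel_minutes": 12,
    },
}


def best_design(objective):
    """Return the design that minimizes the single chosen objective."""
    return min(designs, key=lambda name: designs[name][objective])


for objective in ("driver_risk", "pedestrian_risk", "travel_minutes"):
    print(objective, "->", best_design(objective))
```

Each objective selects a different design, and the optimizer itself has no way to say which objective is the right one. That choice is made by whoever deploys it.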

We next moved to data governance. Data are, of course, AI’s raw material, and only those who have access to data can deploy AI models. A researcher called Paul-Olivier Dehaye wants to model discrimination of certain workers. To do this, he needs to pool the personal data from different individuals into what is called a “data trust”. Data trusts are one of several models for data governance being floated about; the DECODE project’s data commons are another.

In this discussion, even the GDPR’s success appears to have some cracks. For example, Uber is using it to refuse to give drivers their data, claiming that would impact the riders’ privacy. A participant claimed that the GDPR has a blind spot in that it has nothing to say about standards for data portability. U.S. tech companies have a project called Data Transfer, where they are dreaming up those standards, and doing so in a way that will benefit them most (again). Pragmatically, she thought the EU should set its own standards for this.

We ended with some constructive suggestions. One concerned data governance itself. As noted above, the MyData community and other actors in the tech policy space have made substantial intellectual progress on data governance. Were this progress to be enshrined into EU legislation and standards setting, this would maybe help mitigate the potential of the AI industry to worsen inequalities. For example, saying “everyone has a right to own their data” is not precise enough. It makes a huge difference whether personal data are considered to be a freehold commodity or an unalienable human right. In the former case, people can sell their data to whomever they want: data would thus be like material possessions. In this case, market forces are likely to concentrate their ownership in few hands, because data are much more valuable when aggregated in humongous piles of big data. But in the latter case, data are like the right to freedom. I have it, but I am not allowed to sell myself into slavery. In this scenario, data ownership does not concentrate.

Another constructive suggestion concerned enabling a next-generation eIDAS, to allow for “disposable online identities”. These are pairs of cryptographic keys that you would use for the purpose of accessing a service: instead of showing your ID to the supermarket cashier when you buy alcoholic drinks, you would show them a statement digitally signed by the registrar that says more or less “the owner of this key pair is over 18”, and then sign it with your private key. This way, the supermarket knows you are over 18, but does not know who you are. It does have your public key, but you never need to use that key pair again: that is what makes it disposable.
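The flow described above can be sketched in a few lines. This is a toy under stated assumptions, not how eIDAS or any real registrar works. It uses Lamport one-time signatures only because they can be built from the Python standard library; a real system would more plausibly use a standard scheme such as Ed25519. The statement format, the `cashier_accepts` helper and the cashier's random challenge (added so that an old signature cannot be replayed) are all hypothetical.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(message: bytes):
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def generate_keypair():
    """Lamport key pair: 256 pairs of random secrets and their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public


def sign(private, message: bytes):
    """Reveal one secret per message bit. A key must sign only ONE message."""
    return [private[i][bit] for i, bit in enumerate(_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    return len(signature) == 256 and all(
        _h(sig) == public[i][bit]
        for i, (sig, bit) in enumerate(zip(signature, _bits(message)))
    )


def fingerprint(public) -> bytes:
    return _h(b"".join(a + b for a, b in public))


def cashier_accepts(registrar_public, customer_public, attestation, challenge, response):
    """Hypothetical check: the registrar vouches for the key, the customer holds it."""
    statement = b"over-18:" + fingerprint(customer_public)
    return verify(registrar_public, statement, attestation) and verify(
        customer_public, statement + challenge, response
    )


# Registrar and customer each create a key pair (one-time keys in this toy).
registrar_private, registrar_public = generate_keypair()
customer_private, customer_public = generate_keypair()

# The registrar signs "the owner of this key pair is over 18": no name, no birth date.
statement = b"over-18:" + fingerprint(customer_public)
attestation = sign(registrar_private, statement)

# At the till: the cashier sends a fresh challenge, the customer signs it once.
challenge = secrets.token_bytes(16)
response = sign(customer_private, statement + challenge)

print(cashier_accepts(registrar_public, customer_public, attestation, challenge, response))
```

The cashier learns only that some key pair is attested as over 18 and that the customer controls it. If the customer discards the key pair afterwards, nothing links this purchase to the next one.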

Further suggestions included legislating on mandatory auditability of algorithms (there is even an NGO doing work on this, AlgorithmWatch), investments in early data literacy in education, and designing for cultural differences: Europeans care more about privacy, whereas many people in Asia are relatively uninterested in it.

How is this all financed?

This event is part of the NGI Forward project of the Next Generation Internet (NGI) initiative, launched by the European Commission in the autumn of 2016. It has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 825652 from 2019 to 2021. You can learn more about the initiative and our involvement in it at https://exchange.ngi.eu.