I want to revive this thread in light of a couple of disturbing developments I am seeing. They are not directly related to the “magic COVID app”, but they do resonate with some of the arguments we explored in this event.

1. Restricted freedoms in Asia-Pacific

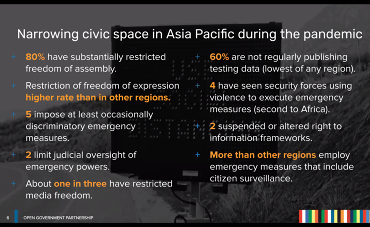

Earlier this week, the Open Government Partnership held this meeting. They found robust evidence that states inclined towards authoritarianism exploited the pandemic to increase surveillance and restrict liberties. Most countries in the region are also restricting the publication of data on the pandemic itself, presumably so that they can control the narrative and keep their nations in a perpetual state of fear. Authoritarians love fear, because it makes government overreach more acceptable.

I am not convinced that this is only an Asian phenomenon. In my own native Italy, 19 regions out of 20 refuse to publish open data on COVID-19 (see the thoughtful post by Matteo Brunati, “Il governo (non è) aperto durante la pandemia: serve una spinta”, on his blog Casual.info.in.a.bottle, in Italian).

2. Smart city infrastructure re-deployed to counter-demonstration surveillance in the USA

The city of San Diego, in progressive California, has 4,000 smart traffic lights. Which have cameras. Which store their footage on some server farm. Turns out San Diego police are now accessing that footage in search of material to incriminate Black Lives Matter protestors. The whole thing had been predicted by the EFF in 2017, and this lends some strength to slippery slope-type arguments whenever someone wants more monitoring and more data on the spaces where humans live.

We are still very far from a bottom line on the consequences of SARS-CoV-2 for the Internet and how humans use it. But the shadows are deepening, and I fear that the opportunists who think the pandemic is a good excuse for getting more surveillance tech in place… are mostly right.