Hi John!

Can’t believe I forgot that in the original post.

Interesting concepts. But, as far as I can see, a centralized proprietary platform. Quite the opposite of distributed and free software or open source. So, good luck, but not for me.

@fjanss, your assumptions about what we are creating are incorrect. We are NOT building a centralized platform. While it is true that we have not built in the DLT aspect of our system yet, we will launch with a DLT in place. (We are 10 months out from the planned launch and plan to remain fluid as to the specific implementation until Q2.) Also, we will have open source aspects to our project. We will not open source aspects of the system that could enable one of the Internet Platforms to put us out of business before we get started. Good luck

This looks really interesting. I submitted to the alpha. I definitely do think that this needs to be made open, at least eventually. This would help to make it grow larger and also stop any one platform from controlling this information.

@zvanstanley, thanks for the feedback. We are using the annotation standard and JSON-LD.
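For readers wondering what a link between two snippets could look like as JSON-LD, here is a minimal sketch. It assumes "the annotation standard" means the W3C Web Annotation Data Model, which the post does not confirm. The function name, the example URLs, and the choice of which snippet is the body and which is the target are all hypothetical, not Bridgit's actual schema.

```python
import json


def make_bridge_annotation(annotation_id, source_url, source_quote,
                           target_url, target_quote):
    """Build a Web Annotation (JSON-LD) linking two quoted snippets.

    Assumption: both ends are modelled as SpecificResource objects with a
    TextQuoteSelector. This is one plausible mapping, not a confirmed one.
    """
    return {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "id": annotation_id,
        "type": "Annotation",
        "motivation": "linking",
        "body": {
            "type": "SpecificResource",
            "source": source_url,
            "selector": {"type": "TextQuoteSelector", "exact": source_quote},
        },
        "target": {
            "type": "SpecificResource",
            "source": target_url,
            "selector": {"type": "TextQuoteSelector", "exact": target_quote},
        },
    }


if __name__ == "__main__":
    annotation = make_bridge_annotation(
        "https://example.org/annotations/1",   # hypothetical IRI
        "https://example.org/article-a", "first snippet",
        "https://example.org/article-b", "second snippet",
    )
    print(json.dumps(annotation, indent=2))
```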

For this to create real value wrt collective intelligence, we need to ensure the following:

- All bridges have integrity, meaning that the relationship between the two content snippets is correct. We envision a validation process for this, whereby three approved validators have to agree that the relationship is correct.

- The system does not get filled up with fake accounts and bots.

Without integrity, there is no value to a knowledge map.
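The three-validator rule above could be sketched roughly as follows. This is only an illustration under stated assumptions: the `Bridge` class, the field names, and the function names are hypothetical, and the post does not say how dissenting votes are handled, so this sketch simply counts agreeing votes from approved validators.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

REQUIRED_APPROVALS = 3  # from the post: three approved validators must agree


@dataclass
class Bridge:
    source_snippet: str
    target_snippet: str
    relationship: str
    # validator id -> True if they agree the relationship is correct
    votes: Dict[str, bool] = field(default_factory=dict)


def record_vote(bridge: Bridge, validator_id: str, agrees: bool,
                approved_validators: Set[str]) -> None:
    """Store one vote per validator; reject anyone not on the approved list."""
    if validator_id not in approved_validators:
        raise PermissionError(f"{validator_id} is not an approved validator")
    bridge.votes[validator_id] = agrees


def is_validated(bridge: Bridge) -> bool:
    """A bridge counts as validated once enough approved validators agree."""
    return sum(bridge.votes.values()) >= REQUIRED_APPROVALS
```

Keeping one vote per validator id means a single account cannot reach the threshold alone, which is only useful if the fake-account problem in the second bullet is also solved.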

I’m open to conversations about how this can be achieved in an open framework.

I’d also recommend checking Veeo. Similar value propositions and a very friendly and welcoming team of creators!

Hey John

We are starting a mapping project for COVID19.

The COVID19 Mapping project aims to crowdmap the online information ecology for the COVID19 virus including second and third order effects of the virus.

The project utilizes Bridgit’s mapping capability to catalyze a Massive Online Research Collaboration on COVID19. Massive Online Research Collaborations HELP researchers connect and interact with other researchers on any webpage WITHOUT any coordination EVEN IF they don’t know each other or speak the same language WITH a self-generating interactive knowledge map. AI will monitor the knowledge map and send alerts about emerging threats, false news, and misinformation.

Outcomes of the project include a first-generation interactive knowledge map, a shared context for collaboration and communication, a cohesive community, and faster, better answers that accelerate insight, innovation, and learning.

Register online at https://bridgit.io

Thanks! Veeo is cool. Would love to collab with them. Do you know anyone there?

Ambitious project. Got any screenshots you can share so we can see what this kind of mapping might look like?

Hey John

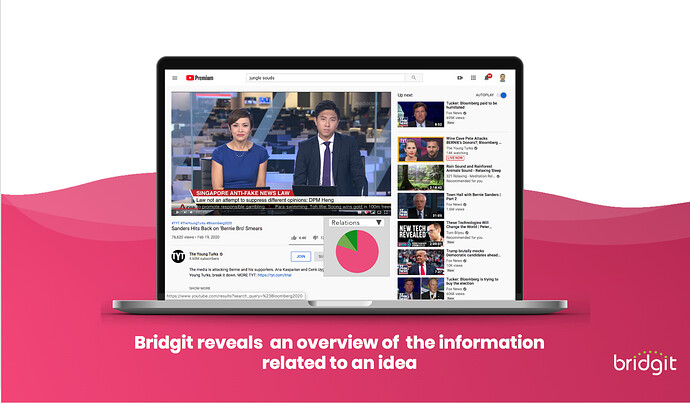

Here is a mockup of how the overlay looks

This is what the map view looks like:

[Image: screenshot of the map view]

This is how bridges assist in sense making.

[Image: screenshot of bridges assisting in sense making]

It may make more sense to see the entire deck:

Would love any feedback.

p.s., You have a most interesting background

I’ve been in touch with the Veeo founders. I’ll let them know of this conv

Awesome. Thanks.

So it depends on consistent and widespread tagging? It’s either that or machine learning I guess.

Both. Machine learning can suggest tags and connections. But there will be a human-in-the-loop for the foreseeable future.
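As a rough illustration of that split between machine suggestions and human review, here is a minimal sketch. Everything in it is hypothetical (the `Suggestion` type, the threshold value, the function names); it only shows the general shape of a human-in-the-loop step, not how Bridgit actually works.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Suggestion:
    tag: str
    score: float  # model confidence in [0, 1]; the model itself is out of scope


def triage(suggestions: List[Suggestion],
           review_threshold: float = 0.5) -> List[Suggestion]:
    """Keep suggestions worth a reviewer's time, most confident first."""
    kept = [s for s in suggestions if s.score >= review_threshold]
    return sorted(kept, key=lambda s: s.score, reverse=True)


def apply_review(suggestions: List[Suggestion],
                 human_decisions: Dict[str, bool]) -> List[str]:
    """Only tags a human explicitly accepted are applied; the model never auto-applies."""
    return [s.tag for s in suggestions if human_decisions.get(s.tag) is True]
```

The point of the design is that the model only ranks candidates, and nothing reaches the map without an explicit human accept.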

Back in the 90s I started and managed the first large news website (sfgate.com). We had the challenge of supplying readers with news from multiple sources (2 newspapers, the AP feed, a TV station, original content and a couple of other sources). None of these orgs or feeds communicated with each other in any way, and the “slugs” or metadata, were always peculiar to their one source.

We wanted to present the news according to what it was about, not just where it came from. This was especially important with the limited screen real estate on a computer, plus Google had just started out and was nothing like the behemoth we have known for the past 20 yrs.

Our only meaningful option was machine learning. Working with the newspaper librarian, we created a news category system, and I then hired her on the side to tag a large number of current stories over a period of time so we could get a good sample.

But for machine learning, that is not enough. One has to have a large amount of data to run it against in order, in this case, for the machine to decide what a news story was really about with any meaningful accuracy. The bigger the sample, the more accurate the result. But, since we were at a newspaper with electronic archives going back decades, we were able to accomplish it so that our system was highly accurate. It was the first - and only - one of its kind. Before Google News or the Apple News utility, we were supplying anyone interested with a lot of daily news that you could see either by source or subject. This is a big reason why we “punched above our weight” in the news world. Yes, the New York Times within a few years had 4 times the traffic we did, but they spent 20 times the money to get there.

But it was only possible to get that accuracy by basing it on a huge - truly huge - amount of data. Without that, you have to tag.
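To make the "hand-tag a sample, then let the machine categorize the rest" idea concrete, here is a small sketch using a multinomial Naive Bayes classifier. This is a stand-in technique chosen for illustration; the post does not say which algorithm the original system used, and the class name, toy stories, and categories below are made up.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and keep purely alphabetic whitespace-separated tokens."""
    return [w for w in text.lower().split() if w.isalpha()]


class NaiveBayesTagger:
    def __init__(self):
        self.word_counts = defaultdict(Counter)  # category -> word -> count
        self.doc_counts = Counter()              # category -> tagged stories seen
        self.vocab = set()

    def train(self, tagged_stories):
        """tagged_stories: iterable of (text, category) pairs tagged by a human."""
        for text, category in tagged_stories:
            self.doc_counts[category] += 1
            for word in tokenize(text):
                self.word_counts[category][word] += 1
                self.vocab.add(word)

    def predict(self, text):
        """Return the category with the highest log-probability for the text."""
        total_docs = sum(self.doc_counts.values())
        words = tokenize(text)
        best_category, best_score = None, -math.inf
        for category, n_docs in self.doc_counts.items():
            score = math.log(n_docs / total_docs)  # prior from tagged sample
            total_words = sum(self.word_counts[category].values())
            for word in words:
                count = self.word_counts[category][word]
                # Add-one smoothing so unseen words do not zero out a category
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_category, best_score = category, score
        return best_category


if __name__ == "__main__":
    tagger = NaiveBayesTagger()
    tagger.train([
        ("the giants won the baseball game", "sports"),
        ("the team lost the final game", "sports"),
        ("the mayor signed the city budget", "politics"),
        ("voters elect a new mayor", "politics"),
    ])
    print(tagger.predict("the mayor and the budget"))
```

With four toy stories this is a demonstration only; as the post says, accuracy in practice depends on having a very large tagged or archived corpus behind it.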

Okay, Thank U very much