TL;DR: Our current strategy for making Graphryder multi-tenant is not working out as well as I had hoped. This is a lengthy post describing the problem, which ends with my advice on how to solve it in the short term and in the mid-to-long term.

Background to multi-tenant Graphryder

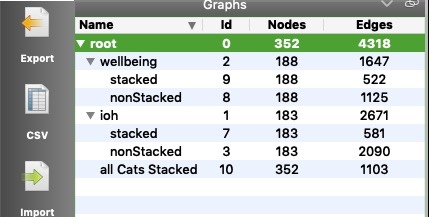

Graphryder’s (“GR”) current architecture can only handle one SSN (Semantic Social Network) graph at a time. This limitation propagates through many levels of the architecture of Graphryder.

- GR’s database, the Community edition of Neo4j, can only handle one graph per running instance of Neo4j.

- The GR API is responsible both for building the Neo4j database and for creating Tulip graphs to serve to the dashboard based on the Neo4j data. This architecture assumes that the Neo4j database only contains data for a single project. Furthermore, GR’s API can only be linked to a single Neo4j database.

- GR’s dashboard can only be linked to a single API.

We have long wanted to make Graphryder multi-tenant – able to handle data from multiple SSN-graphs at all of the levels above to lower the overhead of setting up a new SSNA-ready project. The hard part of that is making the GR database and API multi-tenant, and that is what this post will focus on.

An overview of the challenge

The architecture is currently very reliant on the database only containing a single SSN. To describe why, I will quickly give an overview of how the Graphryder code is structured:

- importFromDiscourse.py is the module that builds the Neo4j database. Classes from this module are called from the settings update routes. This is the class that is called to rebuild the database from scratch from a given set of posts, usually loaded through a tag. Currently, it is only reliable when it is called to rebuild the database from scratch.

- routes are the libraries of classes executed when different routes to the API are called. There are approximately 15 different libraries, totalling about 2000 lines of code. Most of these classes contain Cypher (query language for Neo4j) queries to return subsets of the data that has been loaded into the Neo4j database. All of these queries assume that the database only contains a single SSN graph.

- graphtulip is a library of 10 classes responsible for building the Tulip graphs. These are the data files describing how to draw the graphs on the dashboard. It is about 1700 lines of code in a few files in the graphtulip module of the GR API code. The heavy lifting in this code is done with Cypher queries. All of these queries assume that all data in the database is relevant to the SSN Tulip graph. These classes are in turn called by the tulipr classes in routes.

Approaches

I will now go through a few different approaches for how to make Graphryder multi-tenant. We will carry a few assumptions with us on what we mean by that:

- We want to be able to store SSN graphs for multiple projects on a single instance of Neo4j

- We want a single instance of the API to be able to handle building multiple SSN graphs on Neo4j from separate sets of tags on the platform

- We want a single instance of the API to serve Tulip graphs and other data to the dashboard for multiple projects

Approach 1: Multiple databases on one Neo4j instance

The easiest way to achieve multi-tenancy would be to have the API connect to multiple databases on the same Neo4j instance, and then configure the routes to keep track of which database is being called. Most database software can run multiple databases on the same running instance. Neo4j did not offer this functionality until mid-2019, when it was made available in the Neo4j Enterprise Edition 4.0 pre-release. However, the Enterprise Edition is proprietary and requires a licence.

According to our FOSS principles and operating procedures, this is probably a no-go. However, it’s important to note that we would very likely be given a free licence if we asked for one. Startups with <=50 employees can contact Neo4j to receive a free Startup License for Neo4j Enterprise. And if we ever grew out of that bracket, the cost of a licence would probably not make a big dent. In this case, it’s our idealism and principles that keep us from going with the simplest solution to the problem. I think those principles are sane, especially considering that investing in software that depends on proprietary software creates a very uncomfortable lock-in.

It should also be noted that even if we went with this solution, it would still be risky, as it would also mean upgrading Neo4j to a much newer version, which would most likely break some of our code.

Approach 2: Get rid of Neo4j

Neo4j is not the only graph database out there. There are some open source alternatives. However, most of them don’t support Cypher, which would mean rewriting all queries in some other query language like Gremlin.

I recently found a very promising alternative, called RedisGraph. It is a module for the Redis database, which is a technology we already use for Discourse. Amazingly, it does support Cypher. It comes with some challenges though:

- RedisGraph is very new, and only matured to its first stable release in late 2018.

- While RedisGraph does implement Cypher, it does not implement the entire range of functions that Neo4j does. Some of those Neo4j exclusive functions, like shortestPath, are used by GR API and would have to be replaced with something else.

- There is no guarantee for how much of our Cypher code would work with RedisGraph, and we wouldn’t find out until we had invested significant work.
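To give a feel for what "replaced with something else" could mean for shortestPath, here is a minimal sketch of one possible fallback: computing the path in the API itself with a breadth-first search. Everything in it is an assumption for illustration. The function name and the adjacency dictionary are hypothetical, the graph is treated as unweighted, and the edges would first have to be fetched with a plain MATCH query. It is not how GR does it today.

```python
from collections import deque


def shortest_path(adjacency, start, goal):
    """Return the shortest list of nodes from start to goal, or None if unreachable.

    `adjacency` is a hypothetical dict mapping a node id to the ids it links to.
    """
    if start == goal:
        return [start]
    previous = {start: None}  # node -> node we reached it from
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency.get(node, ()):
            if neighbour in previous:
                continue  # already reached by a path that is at least as short
            previous[neighbour] = node
            if neighbour == goal:
                # Walk backwards from the goal to the start, then reverse.
                path = [goal]
                while previous[path[-1]] is not None:
                    path.append(previous[path[-1]])
                return path[::-1]
            queue.append(neighbour)
    return None


if __name__ == "__main__":
    graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": ["e"]}
    print(shortest_path(graph, "a", "e"))
```

Doing this in Python moves work out of the database, so it would probably only be reasonable for the smaller graphs, but it shows that the missing function is not a blocker in itself.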

Because of the above, I would say that while it’s very promising to use Redis for both the SSN graph and the primary data cache from Discourse, we should not go down that path until we are ready to commit significant resources to rewriting the entire API if need be. It’s months of work, and should ideally be split among a team. It’s the sort of work we could do if we got a grant or investment specifically for the SSNA tech stack.

Approach 3: One database with project SSN sub-graphs

If we’re stuck with Neo4j for now, there are ways to fake having multiple databases. In the Neo4j developer community, there are various recommendations for how to create sub-graphs with labels or new relationships.

This is the approach I proposed and that Matthias asked me to start working on. We agreed that I’d let him know if it looked like it would be more than about two weeks of work to get it done. After mapping it out and running some early code trials, I no longer think that it’s possible to do in this timeframe.

Problems with Approach 3

This is what I have come to realise about the chosen approach:

Importing Discourse content is the easy part

Building subgraphs is easy. We would simply have to add a property or label to each node and relationship on import, and when updating we would just delete all objects with that property or label and import again. This basically means reworking importFromDiscourse.py and making it capable of accepting hard-update calls for the different SSN graphs.
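As a rough sketch of that delete-and-reimport pattern: the snippet below only builds the Cypher statements and their parameters for one project. The `project` property, the `Post` label, the field names and the function name are all hypothetical, not existing GR code, and the `$param` syntax and `DETACH DELETE` assume a Neo4j version that supports them. How the statements are sent to Neo4j (py2neo or otherwise) is left open.

```python
def hard_update_queries(project, posts):
    """Return (query, params) pairs that rebuild one project's sub-graph.

    `posts` is a hypothetical list of dicts with "id" and "title" keys.
    """
    statements = [
        # Step 1: drop only the nodes (and their relationships) of this project.
        ("MATCH (n {project: $project}) DETACH DELETE n", {"project": project}),
    ]
    for post in posts:
        # Step 2: re-create each node, stamped with the project it belongs to.
        statements.append((
            "CREATE (p:Post {post_id: $post_id, title: $title, project: $project})",
            {"post_id": post["id"], "title": post["title"], "project": project},
        ))
    return statements


if __name__ == "__main__":
    for query, params in hard_update_queries("poprebel", [{"id": 1, "title": "Hello"}]):
        print(query, params)
```

Because the delete is scoped by the project property, a hard update of one SSN graph would leave the other projects in the same database untouched.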

There are more calls to Neo4j than expected

I had not correctly estimated how many different calls are made to the Neo4j database. I had assumed that the graph data was front-loaded in a few calls early on. This is not really the case. There are in fact plenty of calls to Neo4j, happening at various times while interacting with the dashboard, that I was not aware of at the time.

Most of these queries are probably not too hard to update with a clause to only consider objects with a property or label passed to the route. There are just very many of them.
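To illustrate the kind of change each query would need, here is a small sketch assuming the project is stored as a `project` property and passed to the route as a parameter. The helper names, the `user` label and the `user_id` property are hypothetical and only stand in for whatever the real routes query.

```python
def scoped_match(label, alias="n"):
    """Build a MATCH pattern restricted to one project's sub-graph."""
    return "MATCH ({alias}:{label} {{project: $project}})".format(
        alias=alias, label=label
    )


def users_query():
    # Single-tenant version would be: "MATCH (u:user) RETURN u.user_id AS user_id"
    return scoped_match("user", "u") + " RETURN u.user_id AS user_id"


def route_params(project, **extra):
    """Merge the project passed to the route with the query's other parameters."""
    params = {"project": project}
    params.update(extra)
    return params


if __name__ == "__main__":
    print(users_query())
    print(route_params("poprebel", limit=10))
```

Each individual edit is this small. The cost comes from repeating it, and testing it, across every query in the routes and in graphtulip.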

There are a lot of routes to reconfigure

There are more routes than I thought that need to be configured to be aware of multiple graphs. There is a lot of junk in the code and a lot of routes to consider. When I made my early estimate I thought that most of these were not used, but it turns out that they are, in places where I hadn’t looked closely enough.

Again, this is not hard, just tedious and time consuming.

The patterns are not standardised

The code is not as standardized as I thought. It is pretty clear that different people have implemented different practices in the modules they have developed. One example of this that makes things more complicated is that while the calls to Neo4j in the routes use pure Cypher, the calls in the importer and graphtulip sometimes use the py2neo library, and sometimes pure Cypher.

graphtulip is complicated

All of the complications up to this point are fixable with time and diligence. From what I can see, there isn’t really anything there that I don’t understand how to do. It would just take more time than I first thought.

Graphtulip is a bit of a different story. While working on this, I have realized that I don’t understand it well enough to confidently dig into it. I would have to first spend some time getting to know that library, which also has a lot of its own Cypher queries that look to be more complicated than those in the routes.

Since Graphtulip is the part of the code that prepares the graphs for the dashboard, GR is of little use without it working flawlessly.

Bottom line: Time needed is at least 4x what is budgeted

Taking all of these issues together, I think we are really looking at closer to 8 weeks than 2 weeks. Even if we had the cash, I don’t think it would be a reasonable investment in the Neo4j-based GR. With that amount of money, I think it would be a better idea to just start working on porting GR to RedisGraph.

And now that we know that it is more complicated than we thought, I also think that putting such a big project on a single developer is bad practice. The money would be better spent on two people or a small team who can work together to avoid getting stuck and to cross-review.

What now?

These are my recommendations, pending approval from @matthias and advice from @alberto.

Short term: We don’t bother making it multi-tenant

For POPREBEL, I would recommend that we bite the bullet for now and run one new VPS per Graphryder. This will cost 30 USD per month per Graphryder install. This can also host the dashboard for its own Graphryder install. It is really quite trivial to set it up, if we accept that we will have a little cluster of VPS on Digital Ocean. I can take on the responsibility of running all VPS on Digital Ocean.

Setting up a new GR VPS like I did earlier would take me no more than a couple of days, tops. I would need to fix a few things on the one I have already set up, but then we can use it as a template and just clone it when we need a new instance. Then all that needs to be done is to update the config files and configure the domain.

Mid-to-long term: We plan for a big reworking of GR

With the POPREBEL deadline off our back and a way to deploy GR with VPSs, we should start experimenting with RedisGraph and refactoring the GR API. I am ready and willing to make room for this during the first half of 2019, in a way that allows for some more experimentation and less of a rush.

Having GR build a graph in Redis opens up a pretty exciting opportunity, which is to create a complete graph mirror cache of all the data on the platform, updated in real time. This is obviously a completely new feature, but if we wrote a Ruby module for Discourse that could interact with RedisGraph, it is perfectly plausible that this could work. This means that we would be adding some powerful functionality, and not just duplicating work done with different tech.

Thoughts?