Masters of Networks 5: networks of meaning.

A hackathon on semantic data to explore collective intelligence.

About

Why

Society is a network. Societal change arises from the fabric of interpersonal relationships. People turn to each other to share vision, build or borrow technological tools, mobilize. They seek advice, help and moral support from each other. They exchange knowledge and share resources. They meet, interact, and work together. All societal change happens in social networks.

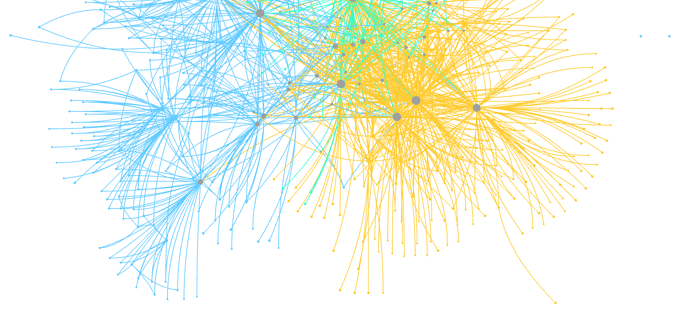

This ceaseless exchange is collective intelligence at work. The resulting networks of associations are its signature. We can use network analysis to understand this process, and perhaps find ways to improve upon it. Are you a social scientist, a policy maker or a civil society activist reflecting on a social process? Then thinking in networks might be a great way to generate fresh, relevant questions and to seek out their answers.

Human relationships between agents involved in societal change develop through dialogue. We can access that dialogue, and take part in it, through online fora, scientific articles and the news. Dialogue, in turn, creates associations between concepts and ideas with every step. As it develops, it gives rise to networks of a different type, whose nodes represent entities that surface in the dialogue: concepts, ideas, social agents. We call these semantic networks, networks of meaning.

What

We come together to find out how to use semantic networks to learn about societal change in the groups that generated them.

Semantic networks tell us how the different concepts connect to each other. Are there surprises? Do apparently unrelated concepts tend to come up in the same exchanges? Does this encode some kind of tension, some change in the making?

The fascinating part is this: by looking at the network, we can extract information that no individual in the network has. The whole is greater than the sum of the parts. Collective intelligence!

But how do you encode human dialogue in a network? How do you extract meaning from it? Does the structure of a semantic network contain some kind of “big picture” for the issue discussed in the dialogue that generated it?

How

We look at semantic datasets and build them into networks. We use open source software for network analysis. We then visualize and interrogate the network to see what we can learn. Our final aim is to prototype methodologies for extracting collectively intelligent outcomes from human conversation.
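As an illustration of the "build them into networks" step, here is a minimal sketch of one common way to turn coded posts into a semantic network. Two codes are linked whenever they annotate the same post, and the edge weight counts how many posts they share. The input format is an assumption made for illustration, not the actual schema of the hackathon datasets: a mapping from hypothetical post IDs to sets of code names.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence_network(posts: dict[str, set[str]]) -> Counter:
    """Return a Counter mapping (code_a, code_b) pairs to co-occurrence counts.

    `posts` maps a (hypothetical) post ID to the set of codes annotating it.
    Each unordered pair of codes on the same post adds 1 to that edge's weight.
    """
    edges: Counter = Counter()
    for codes in posts.values():
        # Sort so that each unordered pair is always stored under the same key.
        for a, b in combinations(sorted(codes), 2):
            edges[(a, b)] += 1
    return edges


# Toy example with made-up codes, not taken from the real datasets.
posts = {
    "post-1": {"privacy", "decentralization", "trust"},
    "post-2": {"privacy", "trust"},
    "post-3": {"decentralization", "open source"},
}

for (a, b), weight in sorted(build_cooccurrence_network(posts).items()):
    print(f"{a} -- {b}: {weight}")
```

The resulting weighted edge list is the kind of input that open source tools such as Gephi or NetworkX can load for visualization and analysis.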

We optimize for interdisciplinarity. We draw participants from at least two very different domains: network/data science and anthropology/ethnography. But any expertise, academic or not, is very welcome, from engineering to history and art. Diversity trumps ability: the advantages of interdisciplinary collaboration outweigh the extra effort of communicating across our respective languages.

Who should come

Masters of Networks 5 is open to all, and especially friendly to beginners. Scholars of any discipline, hackers, policy makers, civil society activists and so on all have something to contribute. In the end, we are all experts here. We are all part of pushing for, and against, societal change, and all humans are expert conversationalists. There's an extra bonus for beginners: networks are easy to visualize. And when you visualize them, as we will, they are often beautiful and intuitive.

Particularly welcome are people with an interest in the future development of Internet technology. Our data are especially juicy in that domain.

Agenda

Masters of Networks 5 consists of three sessions on three consecutive days:

Day 1: 28th of April: Kickoff

- 10:00-11:00 CET: meet everyone and get an overview of the challenges and the available datasets (central room)

- Rest of the day: Work on your challenge, in group rooms or offline.

Day 2: 29th of April: Hack

- 10:00-12:30 CET: optional check-in (central room)

- Rest of the day: Work on your challenge, in group rooms or offline.

Day 3: 30th of April: Final Presentation

- In the morning: Work on your challenge, in group rooms or offline.

- In the afternoon: present and discuss your work (central room)

- In the evening: social hangout and networking (central room)

Challenges

Visualization challenge

Create informative and beautiful visualizations starting from our data. Skills needed: domain expertise in the chosen dataset(s), design, dataviz, netviz. Coordinator: Guy @melancon

- It's not only about creativity and beauty, it's about interactivity: a map seen as a malleable object that you can squeeze information out of.

- It's also about deriving the graphical design from the tasks you need to perform on the data and on its representation on the screen.

- How is a node-link view useful? How would you intuitively like to manipulate, filter or change it at will when exploring it?

- Would you feel you need to synchronize the view with a bar chart on some statistics? A scatterplot to figure out if things correlate?
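To make the bar chart idea concrete, consider one simple statistic such a chart could show next to the node-link view: each node's weighted degree, meaning the sum of the weights of the edges touching it. The sketch below assumes the network is already available as a dictionary of hypothetical co-occurrence edges. It computes and ranks the values without drawing anything.

```python
from collections import defaultdict


def weighted_degree(edges: dict[tuple[str, str], int]) -> dict[str, int]:
    """Sum the weights of all edges touching each node."""
    degree = defaultdict(int)
    for (a, b), weight in edges.items():
        degree[a] += weight
        degree[b] += weight
    return dict(degree)


# Hypothetical co-occurrence edges between codes.
edges = {("privacy", "trust"): 2, ("decentralization", "privacy"): 1}

# Rank nodes by weighted degree (ties broken alphabetically), e.g. to feed a bar chart.
for node, deg in sorted(weighted_degree(edges).items(), key=lambda kv: (-kv[1], kv[0])):
    print(node, deg)
```

Selecting a bar could then highlight the same node in the network view. That linking is a design choice left to the visualization tool.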

Interpretation challenge

How many conclusions and hypotheses can we "squeeze" from the data? Skills needed: social research, ethnography, network science. Coordinator: @alberto

- Interpretation is at the core of the process. You play with the data, you map it, and you iteratively build hypotheses. Ultimately, you hope to arrive at provable claims.

- Semantic networks tend to be too large and dense to make sense of visually. Can we think of simple criteria to filter the data for the highest-quality content only (e.g. only posts with a minimum number of comments, or of a minimum length)? Does the filtering change the results? Note: this question used to have its own challenge; it has now been merged into the interpretation challenge.
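A minimal sketch of that filtering experiment is shown below. It keeps only posts above a minimum number of comments and a minimum length, rebuilds a code co-occurrence network, and compares it with the unfiltered one. The `Post` record, its field names and the thresholds are all hypothetical, and the real datasets may store this information differently.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Post:
    # Hypothetical record layout; the real datasets may use different fields.
    text: str
    n_comments: int
    codes: frozenset[str]


def filter_posts(posts, min_comments=0, min_length=0):
    """Keep posts with at least `min_comments` comments and `min_length` characters."""
    return [p for p in posts if p.n_comments >= min_comments and len(p.text) >= min_length]


def cooccurrence_edges(posts):
    """Count how many posts each unordered pair of codes shares."""
    edges = Counter()
    for p in posts:
        for pair in combinations(sorted(p.codes), 2):
            edges[pair] += 1
    return edges


# Made-up posts for illustration only.
posts = [
    Post("Short note.", 0, frozenset({"privacy", "trust"})),
    Post("A longer post about who controls data on the Internet.", 3,
         frozenset({"privacy", "data ownership"})),
    Post("Another long post on governance of online communities.", 2,
         frozenset({"governance", "trust"})),
]

full = cooccurrence_edges(posts)
filtered = cooccurrence_edges(filter_posts(posts, min_comments=1, min_length=30))
print(len(full), len(filtered))
print(sorted(set(full) - set(filtered)))  # edges that disappear after filtering
```

Comparing edge sets before and after filtering is one simple check of whether the filter changes the results. Comparing rankings of the most central codes is another.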

POPREBEL challenge

Coordinator: @amelia

- POPREBEL is a research project on the populist insurgency in Eastern Europe. Its principal investigator, @Jan, has proposed a challenge around making the most of the project's own dataset.

Text mining challenge

Network analysis is cool, but you may have other methods in your toolbox: sentiment analysis, topic modelling or maybe word2vec? How about the narratives in traditional news media? Can we compare how news outlets report on tech challenges with the discussions among hackers? Let's experiment with different tools and text data, and find answers to exciting social tech dilemmas!

Skills needed: Python and enthusiasm! Coordinators: @kristof_gyodi, @mpalinski

There may be more challenges, but we insist that every group has a coordinator, who takes responsibility for driving it and sharing the relevant material (e.g. software libraries, notes for participants, pseudocode…). If we only have two coordinators, we'll only have two groups. If you think you can lead a group, get in touch with us!

Data

We offer six datasets. Three of them come from ethnographic research; the other three come from text mining research.

Ethnographic datasets

Each of these datasets consists of a corpus of coded posts from online conversations. They share the same structure, but come from different research projects that investigate different problems.

- The NGI Exchange ethnographic dataset (download page). NGI Exchange is an online forum, where hundreds of people exchange views on the next generation Internet.

- The POPREBEL ethnographic dataset (download page). The POPREBEL project explores the phenomenon of populism in Europe. This is a multilingual dataset.

- The OPENCARE ethnographic dataset (download page). The OPENCARE project explores what happens when health and social care is administered not by the state, nor by the private sector, but by communities.

| Ethnographic dataset | Posts | Authors | Annotations | Codes |
|---|---|---|---|---|
| NGI Exchange | 3,935 | 311 | 5,551 | 1,096 |
| POPREBEL | 2,206 | 313 | 5,679 | 1,448 |
| OPENCARE | 3,676 | 270 | 5,769 | 1,609 |

Text mining datasets

- A collection of tech media articles (download page TBA): 247k tokenized articles published between 2016 and 2020.

- Keyword frequencies in popular tech media (download page): i) the frequency of appearance of every unigram and bigram in the texts; ii) the average monthly change in each term's frequency, estimated with OLS regressions.

- Co-occurrences of trending keywords in popular tech media (download page): a dataset for exploring the relationships between topics.
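The exact regression specification behind the "average monthly change" column is not stated here. One plausible reading is assumed below: an ordinary least squares regression of a term's monthly frequency on a month index. The fitted slope is then the estimated average change in frequency per month. The sketch computes that slope directly as the covariance of month and frequency divided by the variance of the month index. The input series is made up.

```python
def monthly_trend(frequencies: list[float]) -> float:
    """OLS slope of frequency on month index 0, 1, ..., n-1.

    Assumes one frequency value per consecutive month; the slope is the
    estimated average change in frequency per month.
    """
    n = len(frequencies)
    if n < 2:
        raise ValueError("need at least two months to estimate a trend")
    months = range(n)
    mean_t = (n - 1) / 2
    mean_y = sum(frequencies) / n
    # slope = sum((t - mean_t) * (y - mean_y)) / sum((t - mean_t) ** 2)
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(months, frequencies))
    var = sum((t - mean_t) ** 2 for t in months)
    return cov / var


# Made-up monthly frequencies for an illustrative term.
print(monthly_trend([10.0, 12.0, 14.0, 16.0]))  # 2.0
```

On Python 3.10 and later, `statistics.linear_regression(range(n), frequencies).slope` gives the same estimate.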

Info and registration

The hackathon takes place online via Zoom. Each challenge will be assigned its own room.

To register, please go here and fill in the form. We will send you the Zoom link one week in advance, with a reminder on the day itself.