Use this thread / topic for a running code review. We can talk about best practices in our call on Monday.

Codes to review during bi-weekly meeting tomorrow (19 Oct 2020):

'don't need to know how to use': this is a placeholder for now, referring to how people feel they don’t necessarily need to understand the mechanics of how things work in order to use them and expect them to function well and safely. For example, users shouldn’t have to know how search engine algorithms work to expect results to be accurate.

'privacy as space': another placeholder code to add more granularity to how participants think and talk about privacy as a set of different spaces

making decisions: made this to disambiguate from decision making, since that code was getting overloaded. It refers to when people are in the process of making decisions, or experiencing it, i.e., “we had to weigh all these factors in order to make the best decision for us” (=/= decision making: the state of decision-making, i.e., “we need an automated decision-making process”)

guiding users: created to add more granularity to UX/design connections.

self-censorship: whenever people don’t say what they want to say because they fear repercussions, feel silly about it, etc. was gonna go with “chilling effect” but thought this would be more broad

more ontological codes:

being practical

being supportive

making accessible

functional sovereignty – is this the same as free-standing technology (a code I have newly created)?

contingency planning and potentially merging with disaster preparedness or mitigating risk / discuss how these all interact

I’m going through threads on how the technology design process is rife with biases that have potential harms. So far, only 1-2 annotations for most of these, but I reckon they’ll have more robust annotations by our next meeting when I’ve gone through more threads. Collecting them here as I think through finessing them:

On modeling and bias/assumptions

- encoding values: I’m getting the sense this has been a general code for any “putting values into design”, which we should now fork and make precise with some of the other codes below

- modeling the 'real world' + *expressing mathematically: These are forked from what I had last week as operationalising the real world

- making generalisations

- assuming objectivity

- *assessing model accuracy: We currently don’t have a code about accuracy??? We really should. I want the word “representation” in this code somehow…

On oversight

- justifying purpose

- 'confirmation bias': probs too jargon-y at the moment, consider ‘feedback loop’ and others

- the ability to explain, which should almost always co-occur with human oversight

- techniques of mitigating bias

On impact

- potential harm: though should I just merge this with unintended consequences?

- differential impact

- higher threshold

Changes I want to propose:

- I changed deployment to the rush to deploy

- Relatedly, I changed non-deployment argument to the more clunky but specific shouldn't just because we can

- vulnerable population to differential impact, to capture how impacts are unevenly distributed

- 'big tech' to tech industry, bc other equally evil companies like Uber are not technically included, and it would align with other industry codes we have

- Is common good also meant to include public good? We don’t have a code currently for “public interest/the public/etc”

- forming a coalition: do we have a code for when groups/people come together as strategies of pushing back? It’s not quite the same thing as connecting people

- Having read through the report, I think it’s important to keep track of human vs. machine abilities. So I created human ability (which nests things like ability to explain and detecting nuance) and machine ability

Quick memo of some of the codes I’m working through this week

Codes about power

- I’m trying to stick to control for any vertical/oppressive interpretations of power. Need a more robust set of codes to capture: controlling behaviour, exploitative labor practices, digital divide

Codes about institutional change

- strategies of pushing back

- driving incentive, which co-occurs with codes like financial incentive and external pressure (might be better to re-name as ‘reputational damage’?)

- creating more problems

- *'holding to a higher threshold': this idea that public institutions should be held to a higher threshold??? halp

Codes about impact of tech

- still trying to capture what happens when, even with accurate and fair design processes, potential harm and unintended consequences will take place because technologies will be *'interacting dynamically' with the social world

Making Code Review Meetings more Streamlined

At our meeting today @katejsim and I discussed ways to make our bi-weekly meetings more structured so that we are all on the same page and don’t have to go over time as much.

(1) flag codes ahead of time that we are unsure about or want to discuss during the meeting. Post these here before meetings.

(2) review codes by thematic area rather than by order of occurrence

(3) if you are working on certain thematic/conceptual areas, flag/describe them here before meetings if it will help contextualise your codes.

(4) review codes using the three categories we established:

(a) salience: does the code meaningfully capture the essence of what is being said?

- ex: participants are discussing whether something is, e.g., machine learning: defining terminology

(b) explicitness: does the code make explicit what is going on?

- ex: interviews pushing back on dominant ways of framing an issue: nuancing the debate and reframing priorities

(c) dynamism: does the code follow established convention and is it clear enough to work in different threads?

- ex: if we already have an established convention for action-state codes, e.g. being practical, being strategic, stick to that format. These kinds of codes are broad enough to be usable across different threads, but concrete enough that they aren’t vague (as opposed to pragmatic or strategic).

Prior to bi-weekly meeting on Monday, codes I’d like to discuss:

Efficiency codes: For whatever reason, we had two different efficiency codes, so I went through the annotations and specified them:

- doing things efficiently: this was Mia’s code that had the definition “good use of time and energy.” Made it into a gerund to be more precise; should be used for codes about doing things well. I’m wondering if we should just make this doing things EFFECTIVELY to make a distinction? Speaking of, didn’t we have an effectiveness code??

- prioritising efficiency: this was also initially efficiency. This should be used for when individuals and groups prioritise cost efficiency above other values

- 'beyond efficiency': for critiques and pushbacks of the code above

History codes:

- understanding history: we might want to eventually merge this with considering larger context

- repeating the past

Social change codes:

- added creative solution

- added placing external pressure

- added creating more problems for when doing something actually causes more issues

- reminder that I have differential impact to capture how actions impact different people differently. We currently have vulnerable populations but I take issue with this bc it doesn’t help GR show who is made vulnerable and why. I think differential impact, and having it co-occur with in/justice codes like migration or harassment, will be better. Thoughts?

Policy codes:

- At some point, we need to go over policy codes. Right now, we have overconcentrated codes for regulation and policy, and we don’t really capture people’s attitudes toward or evaluation of policy; we have also lumped together most interventions into imagining alternatives.

- added *attitudes about policy as a placeholder, but this needs to become analytical, not sure how

AI codes cont’d (see previous coding memos)

- *implicating practitioners: This needs help. Doesn’t quite capture how actions implicate the people driving the action

- *'holding to a higher threshold': need to capture how, for example, people hold value-driven entities (like public institutions) to a higher standard than commercial ones

- treating like a human: for capturing salient moments when participants personify AI

This is excellent and super helpful, @katejsim, thank you! Will add my stuff over the weekend

hello!

I recently created the code language ideologies which is a specific concept in linguistics, and which is perhaps too specific for our coding work (so I will change it), but it did make me think about the codes we are creating around stance and appraisal.

language ideologies describe the ways in which people evaluate ways of speaking and the (often normative) sets of beliefs people have around the purpose and function of language in society. In that vein, I think our current codebook could benefit from more codes that capture (a) how people are evaluating technologies and (b) how they are positioning themselves in relation to both these technologies and the debates around them.

We have a lot of codes around what people are doing in relationship to tech: building alternatives, nuancing, defining, asking, connecting, coordinating etc. But I think we can build on the appraisal codes more and define a clearer ontology for these: how do people feel about XYZ and how are they positioning themselves in relationship to ABC?

By this I mean affective or emotive codes like worries and optimism, but also codes that capture more of how people are thinking about/appraising tech, e.g. potential harm, prohibitively expensive, safety concerns, feasibility, limiting factor, unintended consequences. So, codes that link people’s evaluations and emotional or personal responses to the given topic.

I also think our sense of codes are great, and we also have a number of codes around being, which are helpful.

When I consider more meta codes like imagining the future/alternatives or defining terminology or considering larger context, I think about why people are doing that. How are people evaluating and assessing what is working/not working with current systems and technologies?

Just wanted to put this here as something to think about. Happy to discuss!

I love this idea and I think it’s really, really great. Let’s prioritise and discuss tomorrow too.

@nadia has pointed out that it’s weird that safety and security (and even cybersecurity) don’t tie to violence or any codes like that, despite both @katejsim and Corinne’s posts. And when you look at the ego network of violence, it doesn’t tie to anything technological. Similarly, there are no connections to safety that index anything like Kate’s research. Can we a) revisit Kate’s original post to see what’s going on there with the coding, if we are missing any systems or safety codes and b) review the cluster of codes around safety, violence, etc? Flagging for next meeting.

yes, I’ve noticed this too. I reckon this might be a result of having too many specific violence codes (e.g., racism or sexual assault) and not also coding them with values like safety. We should establish how we want to do this.

I also want to track content moderation and regulation, relatedly, because I think they are potentially breaking up our free speech and hate speech codes from each other (if we code too much with regulation). Note for you when you get to regulation, Kate

@nadia: It would be interesting to see a nuanced presence of, or distinction between, sources of financial sustainability/financing: private sector, for-profit, public sector, nonprofit.

Also: who are the key players / market makers / funders / customers / users

on the topic of work, livelihoods, business

So another thing I notice around SSNA is that the relationships between governance, politics and democracy are unclear. I am thinking specifically about power - where it lies, how it works in practice (who are the power players)

@amelia, it may well be that we need to come at this, or look at it in parallel, through some proxy space where the debate/power struggle is playing out, e.g. in the context of AI or Identities.

Another question I have is how Justice plays out in relation to control and freedom. Also, it is odd that AI is missing from this, as many issues to do with freedom and control, agency, and social (justice) have popped up when discussing AI and automated systems.

6. Freedom and control

Individuals:

Reconciliation of data governance with user control over data.

Chapter Topic Tag: freedom-and-control

Relevant Ethno codes:

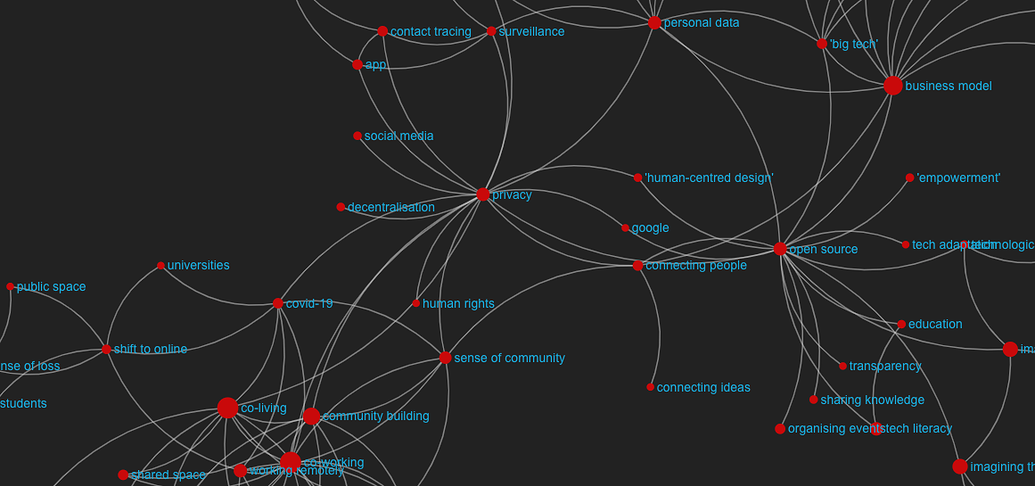

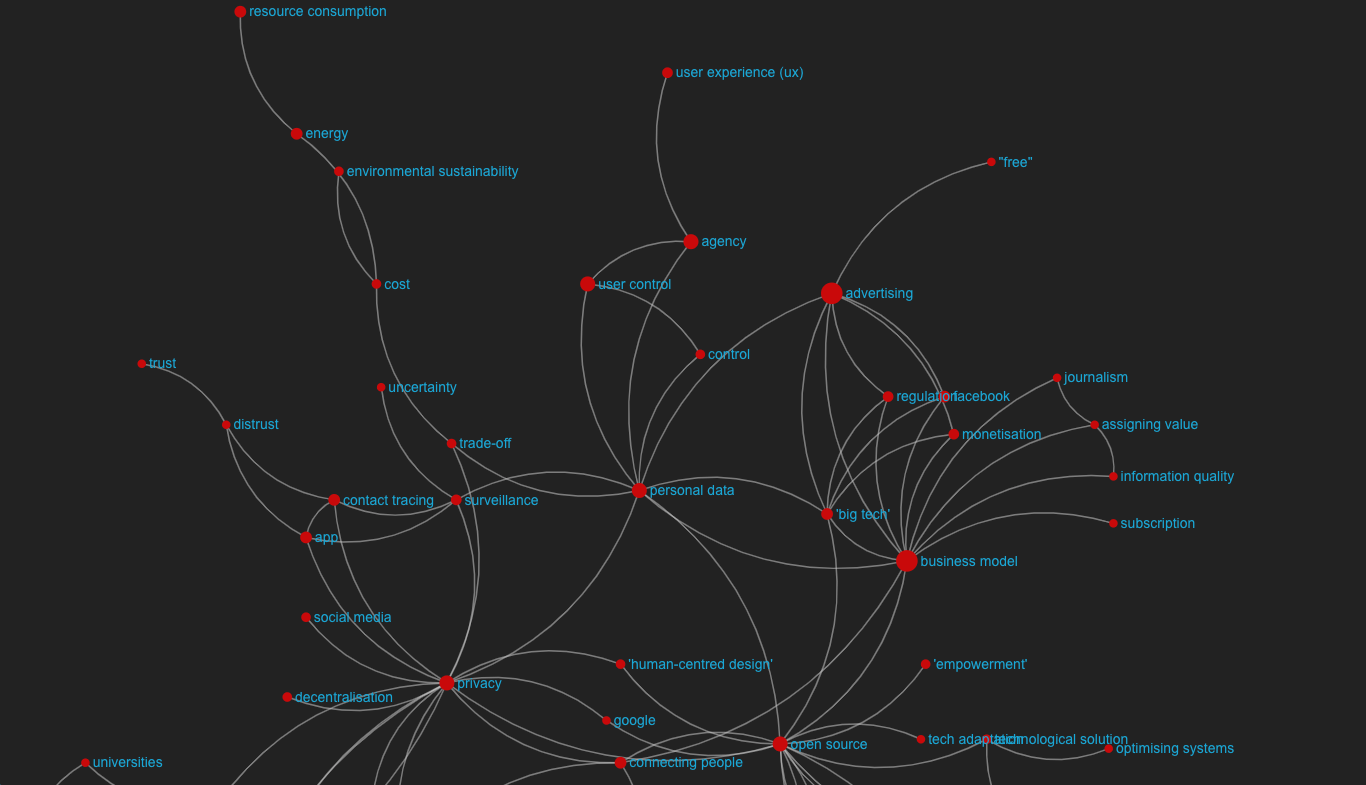

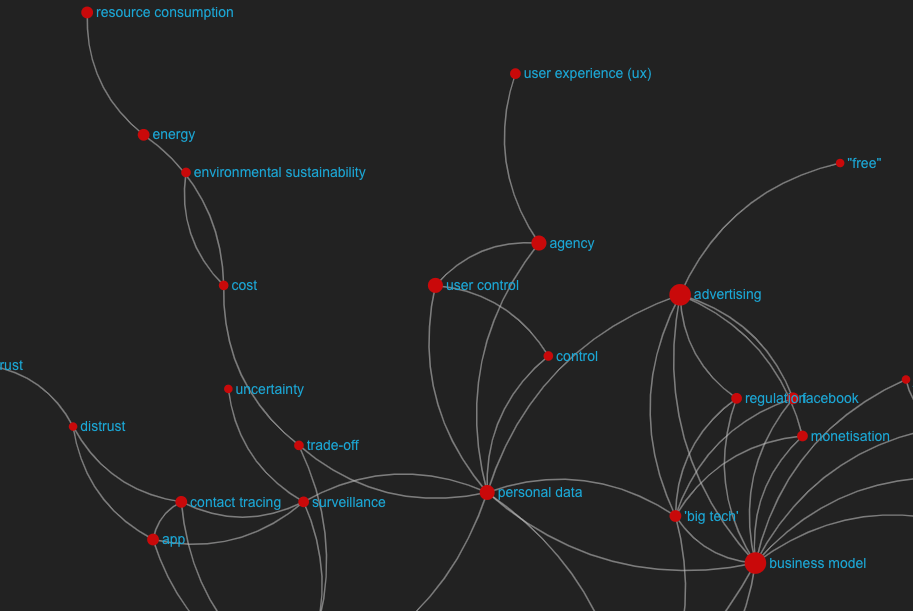

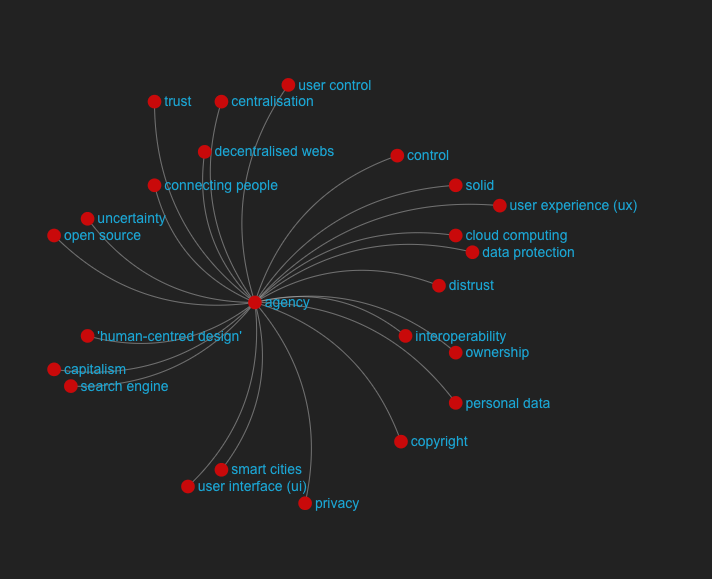

This is a strong theme in our SSNA. We have the codes control, user control, and agency, which are all useful to track and visualise. They are tied to personal data, advertising, big tech, and the idea of a trade-off, which comes up frequently and is a very useful code to visualise and track when talking about control, freedom, and agency. People feel uncertainty and distrust when it comes to technologies of surveillance that use their personal data. This is tied to regulation, and a widespread belief that individualising responsibility is a problem when it comes to control over personal data: that governments are responsible for regulating big tech companies, and putting that onus on individuals is not right. There is also a trade-off between user control/agency and user experience, mapping on to a sense that having to constantly make decisions about one’s own data (like via GDPR checks on every website) diminishes user experience and has questionable positive effects on user control/agency.

SSNA

so @katejsim it seems there is some disconnect between AI, Agency, Control, Justice, Freedom, Optimisation, Automation - I would expect to see some kind of arc there

A few reasons. In a rush atm so apologies for the brevity/typos, FYI.

The quick and most obvious answer is that higher-level value codes like agency, control, justice have yet to be cleaned up. So you’re seeing how those values are emerging in discussions that span a wide range of topics. @amelia and I will be working on creating a more robust set of emotion codes that will enrich these value codes.

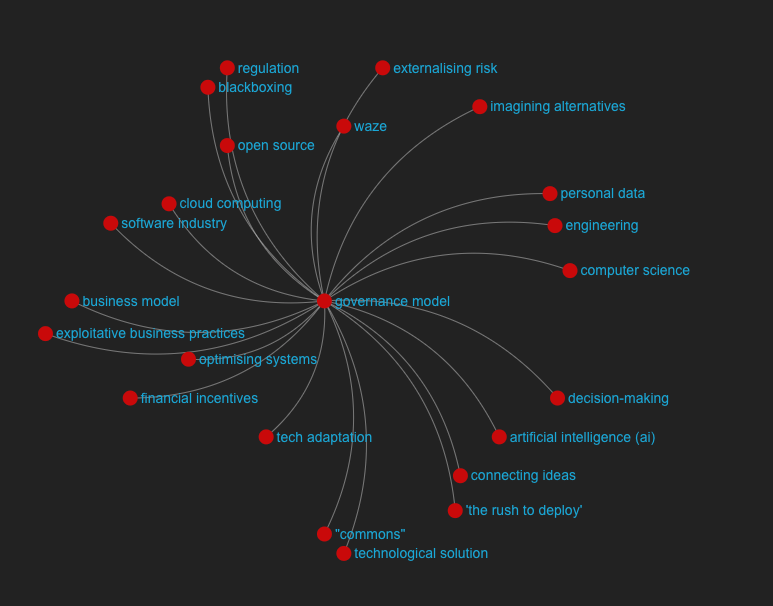

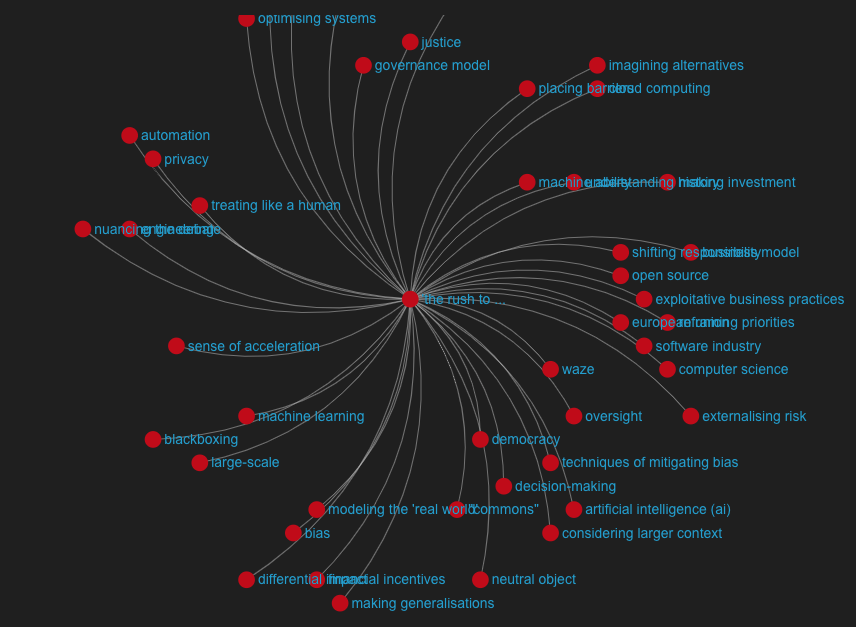

Re: AI, the artificial intelligence code operates like a parent code because so much of the discussion around AI spans a range of topics, from business model and oversight to 'the rush to deploy' and encoding values. This is why you’ll get the kinds of codes you want to see if you follow those, rather than ai. For example, the 'the rush to deploy' code co-occurs with really interesting codes that tell stories about the business and incentive structures in place (exploitative business practices, software industry, externalising risk) and the challenges of computationally representing the real world (modelling the real world, making generalisations, considering larger context, decision-making, techniques of mitigating bias).
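For anyone who wants to sanity-check co-occurrence outside the graph view, here is a minimal sketch of how code co-occurrence and a code's ego network could be computed. It assumes annotations can be exported as (post id, code) pairs; the function names and the sample annotations are made up for illustration and are not how the SSNA tooling actually works.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(annotations):
    """Count how often each unordered pair of codes is applied to the same post."""
    codes_by_post = {}
    for post_id, code in annotations:
        codes_by_post.setdefault(post_id, set()).add(code)

    counts = Counter()
    for codes in codes_by_post.values():
        # Sort so that each pair is always stored in the same order.
        for pair in combinations(sorted(codes), 2):
            counts[pair] += 1
    return counts


def ego_network(counts, code, min_weight=1):
    """Return {neighbour: weight} for every code that co-occurs with `code`."""
    neighbours = {}
    for (a, b), weight in counts.items():
        if weight >= min_weight and code in (a, b):
            neighbours[b if a == code else a] = weight
    return neighbours


# Hypothetical annotations, invented for illustration only.
annotations = [
    (1, "artificial intelligence"), (1, "the rush to deploy"), (1, "externalising risk"),
    (2, "the rush to deploy"), (2, "externalising risk"), (2, "software industry"),
    (3, "artificial intelligence"), (3, "encoding values"),
]

counts = cooccurrence_counts(annotations)
print(ego_network(counts, "the rush to deploy"))
```

Raising `min_weight` filters out weak ties, which is one way to see why following a specific code like 'the rush to deploy' can surface a clearer cluster than following a broad parent code.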

super interesting + makes clear we are going to need a lot of time and many conversations to lay this all out, then synthesise into conclusions and recommendations, then narrativise into something that works for people with ADHD